Table of Contents

- 1.CVCUDA

- 1.1 CVCUDA example

- 1.2 Writing CMakeLists.txt

- 2 Install CUDA and cuDNN (see https://blog.csdn.net/tywwwww/article/details/134319545)

- 3 TensorRT installation

1.CVCUDA

https://cvcuda.github.io/CV-CUDA/installation.html is the installation and usage guide.

After installing, set the environment variables; see https://blog.csdn.net/Deaohst/article/details/138472286

https://github.com/CVCUDA/CV-CUDA/releases hosts the installation files.
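As a rough sketch of the environment-variable step mentioned above, the lines below prepend the CV-CUDA library directory to LD_LIBRARY_PATH. The directory is an assumption taken from the CMake paths in section 1.2 (a manual unpack under /home/xx/...); the linked post may set different variables, so adjust this to your own install.

```shell
# Assumption: CV-CUDA libraries live under this prefix (same path as in the
# CMake file of section 1.2). Replace it with your actual install location.
CVCUDA_LIB="/home/xx/code_cuda/cv_cuda_lib/opt/nvidia/cvcuda0/lib/x86_64-linux-gnu"

# Prepend the directory. The ${VAR:+...} form only adds the ':' separator
# when LD_LIBRARY_PATH already has a value.
export LD_LIBRARY_PATH="${CVCUDA_LIB}${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}"

echo "LD_LIBRARY_PATH=${LD_LIBRARY_PATH}"
```

To make the setting permanent, the export line can be appended to ~/.bashrc, in the same way as the TensorRT path in section 3.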

1.1 CVCUDA example

The blog post at https://zhuanlan.zhihu.com/p/667503760 walks through a simple example.

1.2 Writing CMakeLists.txt

find_package(CUDA REQUIRED)

set(CMAKE_CXX_FLAGS "-Wno-deprecated-enum-enum-conversion")

find_package(OpenCV)

include_directories(${OpenCV_INCLUDE_DIRS})

# opencv (manual paths, commented out)

#include_directories(/home/redpine/software/opencv420/include/opencv4)

#link_directories(/home/redpine/software/opencv420/lib)

#set(OpenCV_LIBS opencv_core opencv_highgui opencv_imgproc opencv_imgcodecs opencv_videoio opencv_video)

# tag: Build crop and resize sample

#tensorrt

include_directories(/home/xx/software/TensorRT-10.5.0.18/include)

link_directories(/home/xx/software/TensorRT-10.5.0.18/lib)

#cvcuda

include_directories(/home/xx/code_cuda/cv_cuda_lib/opt/nvidia/cvcuda0/include)

link_directories(/home/xx/code_cuda/cv_cuda_lib/opt/nvidia/cvcuda0/lib/x86_64-linux-gnu)

#cuda

include_directories(/usr/local/cuda/include)

link_directories(/usr/local/cuda/lib64)

#cvcuda common

include_directories(/home/xx/code_cuda/cv_cuda_lib/samples/common)

link_directories(/home/xx/code_cuda/cv_cuda_lib/samples/common/build)

add_executable(cvcuda_sample_cropandresize Main.cpp)

target_link_libraries(cvcuda_sample_cropandresize nvcv_types cvcuda cudart cvcuda_samples_common nvinfer nvjpeg ${OpenCV_LIBS})

target_include_directories(cvcuda_sample_cropandresize PRIVATE ${CMAKE_CURRENT_SOURCE_DIR}/..)
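One possible way to build with this CMakeLists.txt is an out-of-source build, sketched below. The helper name build_sample and the sample directory are hypothetical; the sketch also assumes the complete file starts with the usual cmake_minimum_required and project lines, which the fragment above does not show, and that CMake 3.13 or newer is installed (needed for the -S and -B options).

```shell
# build_sample DIR: configure and compile the project found in DIR.
# Hypothetical helper for illustration, not part of CV-CUDA.
build_sample() {
    src_dir="$1"
    if [ ! -f "${src_dir}/CMakeLists.txt" ]; then
        echo "skipped: no CMakeLists.txt in ${src_dir}"
        return 1
    fi
    # Configure into a separate build directory, then compile.
    cmake -S "${src_dir}" -B "${src_dir}/build" &&
        cmake --build "${src_dir}/build"
}

# Assumed location of Main.cpp and CMakeLists.txt; replace with your own.
build_sample "/home/xx/code_cuda/cv_cuda_lib/samples/cropandresize" || true
```

If the build succeeds, the cvcuda_sample_cropandresize executable should appear inside the build directory.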

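2 Install CUDA and cuDNN (see https://blog.csdn.net/tywwwww/article/details/134319545)

Prerequisite: the CUDA driver, the CUDA runtime, and cuDNN must already be installed.

To check, run nvidia-smi and nvcc -V, and confirm that the folder /usr/local/cudaxxx/bin exists.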
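The checks above can be wrapped in a small script, sketched here. The helper name check_tool is hypothetical; it only reports whether a command is on PATH and does not validate versions.

```shell
# check_tool NAME: report whether NAME is an available command.
# Hypothetical helper for illustration.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1"
        return 1
    fi
}

# nvidia-smi ships with the driver, nvcc with the CUDA toolkit.
for tool in nvidia-smi nvcc; do
    check_tool "$tool" || true
done

# List CUDA toolkit directories, if any.
ls -d /usr/local/cuda* 2>/dev/null || echo "no /usr/local/cuda* directory found"
```

When both tools are found, running nvidia-smi and nvcc -V by hand shows the driver and toolkit versions.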

3 TensorRT installation

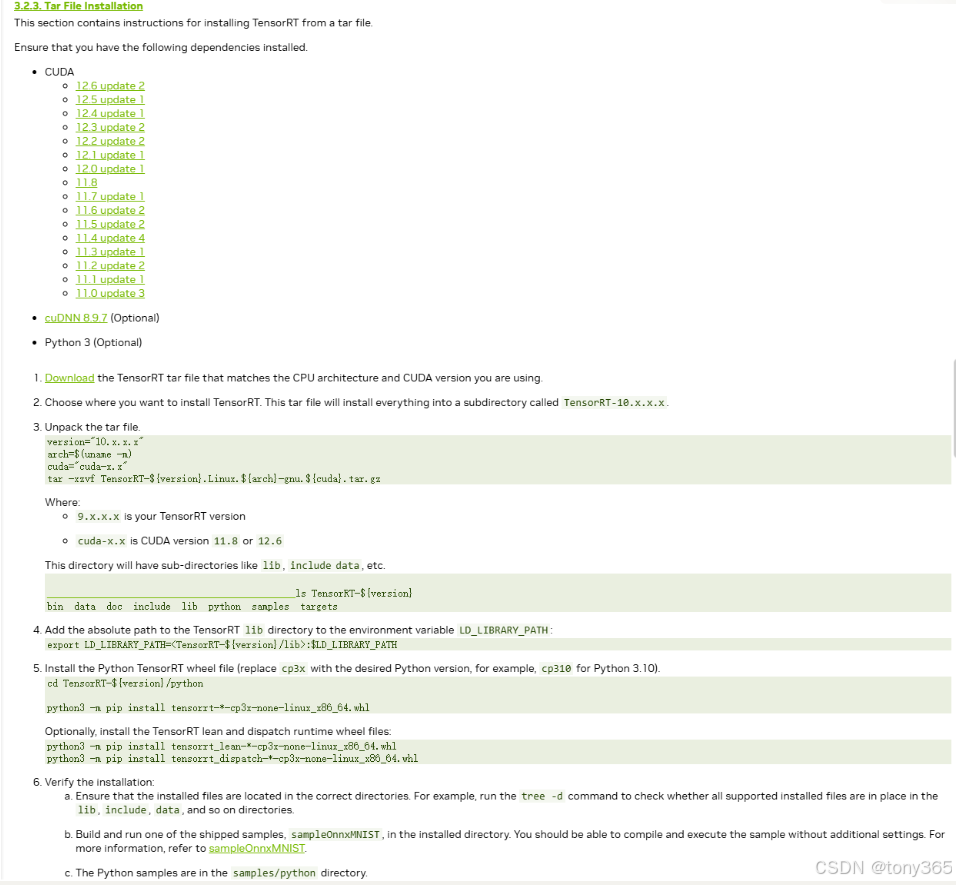

Reference: https://docs.nvidia.com/deeplearning/tensorrt/install-guide/index.html#downloading

Download link: https://developer.nvidia.com/tensorrt/download

This guide uses the tar file installation method.

The local machine runs Ubuntu with the cuda-12.2 driver and the cuda-12.2 runtime.

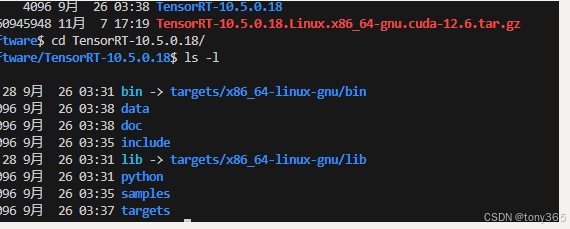

First, download the package that matches your system and CUDA version; here it is TensorRT-10.5.0.18.Linux.x86_64-gnu.cuda-12.6.tar.gz

1) Extract the archive


tar -xzvf TensorRT-10.5.0.18.Linux.x86_64-gnu.cuda-12.6.tar.gz

2) Add the environment variable:

vim ~/.bashrc

Append the following as the last line (use straight quotes, not curly ones):

export LD_LIBRARY_PATH="/home/xx/software/TensorRT-10.5.0.18/lib":$LD_LIBRARY_PATH

source ~/.bashrc
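After sourcing ~/.bashrc, a quick check that the TensorRT directory really is on LD_LIBRARY_PATH might look like the sketch below. The helper name path_list_contains is hypothetical; the directory is the one used in the export line above.

```shell
# path_list_contains LIST DIR: succeed if DIR is an exact entry of the
# colon-separated LIST. Hypothetical helper for illustration.
path_list_contains() {
    case ":$1:" in
        *":$2:"*) return 0 ;;
        *) return 1 ;;
    esac
}

TRT_LIB="/home/xx/software/TensorRT-10.5.0.18/lib"

if path_list_contains "${LD_LIBRARY_PATH:-}" "${TRT_LIB}"; then
    echo "TensorRT lib directory is on LD_LIBRARY_PATH"
else
    echo "TensorRT lib directory is NOT on LD_LIBRARY_PATH; re-check ~/.bashrc"
fi
```

Wrapping the list in colons before matching avoids false positives from entries that merely share a prefix with the TensorRT path.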

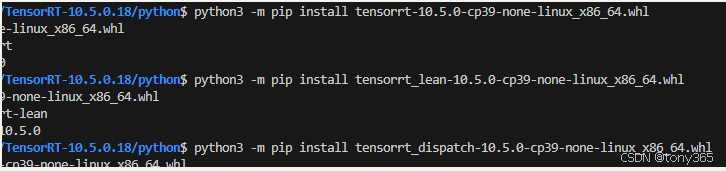

3) Install the Python package

4) Verify with C++

Build:

TensorRT-10.5.0.18/samples/sampleOnnxMNIST$ make

The compiled executable is placed in the TensorRT-10.5.0.18/bin directory and can be run directly:

TensorRT-10.5.0.18/bin$ ./sample_onnx_mnist

5) Verify with Python

The examples are in the TensorRT-10.5.0.18/samples/python directory.
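Before running those samples, a minimal smoke test is to import the module and print its version, as sketched below. The helper name check_tensorrt_python is hypothetical, and the sketch assumes the tensorrt Python package from step 3 was installed for the interpreter being tested.

```shell
# check_tensorrt_python [PYTHON]: try to import tensorrt with the given
# interpreter (default python3) and print its version.
# Hypothetical helper for illustration.
check_tensorrt_python() {
    py="${1:-python3}"
    if "$py" -c "import tensorrt; print(tensorrt.__version__)" 2>/dev/null; then
        return 0
    fi
    echo "tensorrt is not importable with $py"
    return 1
}

check_tensorrt_python python3 || true
```

If the import fails even though the package is installed, the LD_LIBRARY_PATH setting from step 2 is a likely place to look first.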

