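Table of Contents

- 🚩baseline

- 💡Building the Demo!

- 💡Launching the Demo

- 💡Moving to PaddlePaddle

- 🔈Launching the Demo

- 🔈Converting torch to paddle

- 💡Round two on ModelScope

- 🔈Here's a CPU version of the code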

🚩baseline

💡Building the Demo!

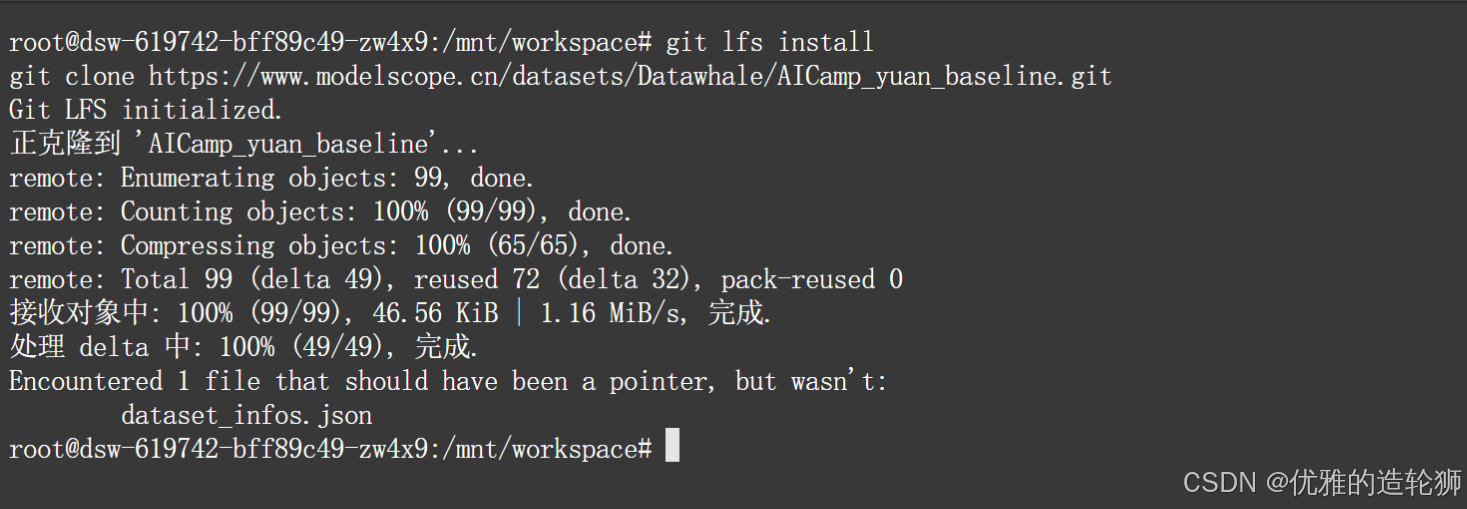

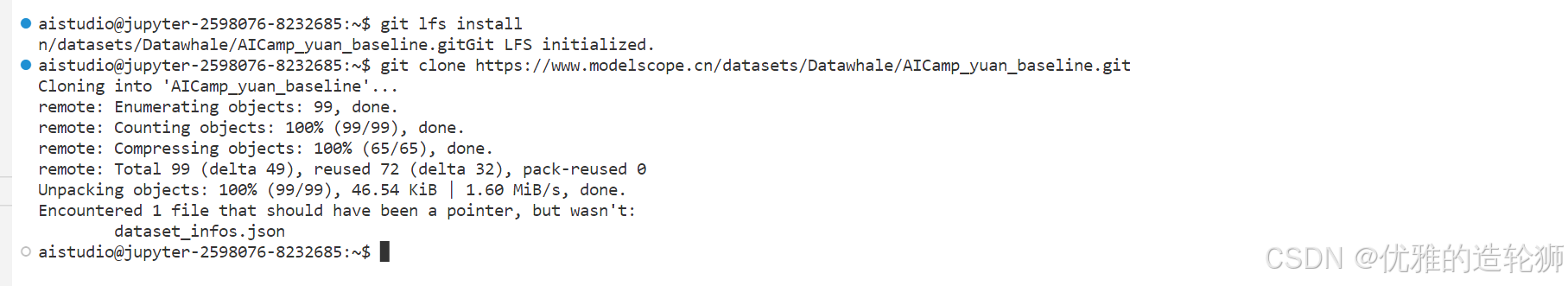

```shell
git lfs install
git clone https://www.modelscope.cn/datasets/Datawhale/AICamp_yuan_baseline.git
```

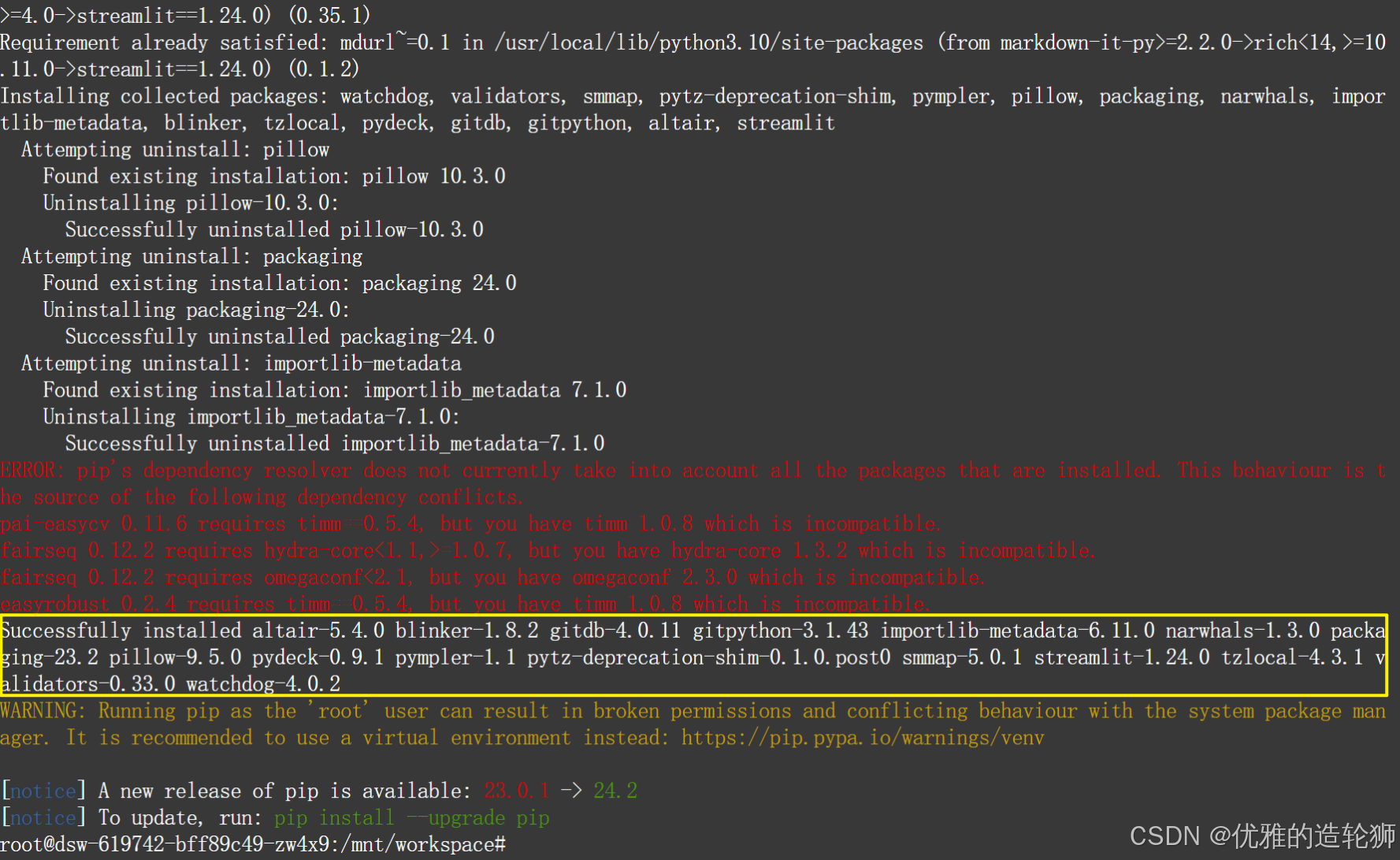

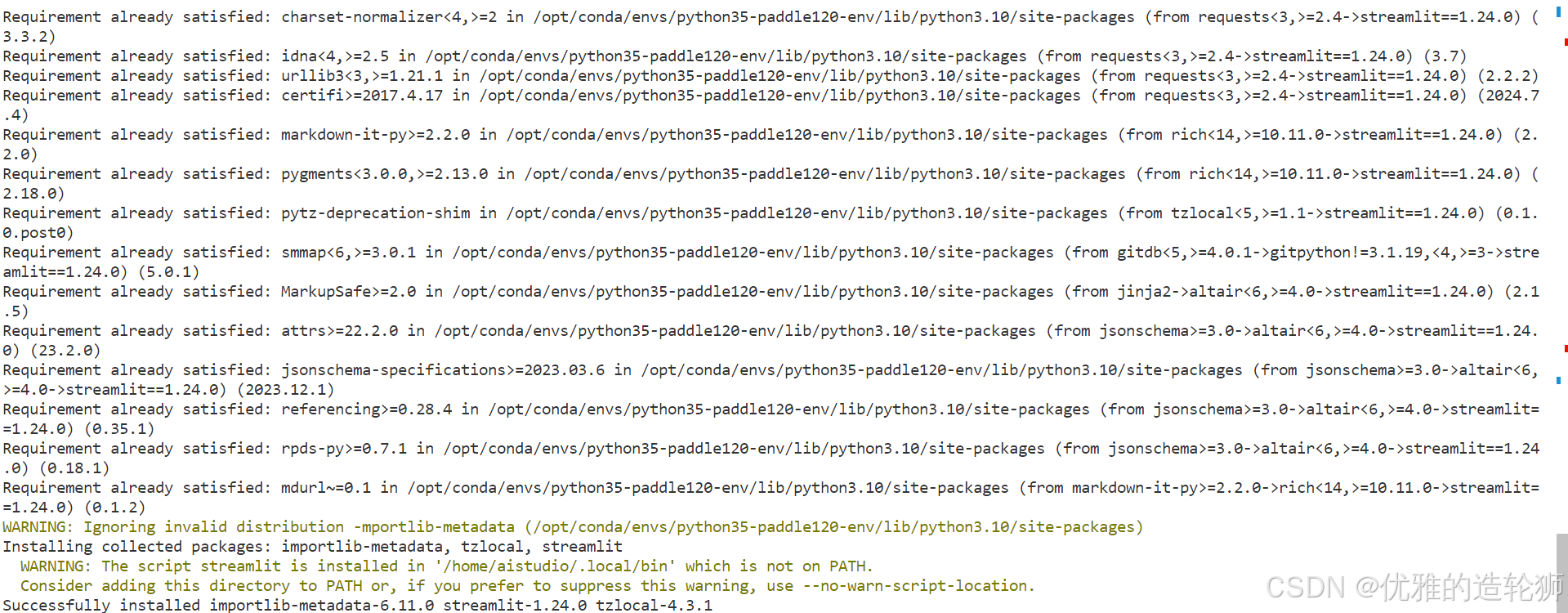

```shell
pip install streamlit==1.24.0
```
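The demo's chat UI uses `st.chat_message` and `st.chat_input`, which, as far as I can tell from the Streamlit changelog, first shipped in 1.24.0. That is presumably why the version is pinned. Below is a small stdlib-only sketch for checking the installed version; `parse_version` and `has_chat_elements` are hypothetical helper names:

```python
from importlib.metadata import PackageNotFoundError, version
from itertools import takewhile
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '1.24.0' (or '1.24.0rc1') into (1, 24, 0), keeping only the leading digits of each part."""
    parts = []
    for piece in text.split(".")[:3]:
        digits = "".join(takewhile(str.isdigit, piece))
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def has_chat_elements(installed: Optional[str] = None) -> bool:
    """True if Streamlit is at least 1.24 (assumed to be the first release with chat elements)."""
    if installed is None:
        try:
            installed = version("streamlit")
        except PackageNotFoundError:
            return False  # streamlit is not installed at all
    return parse_version(installed) >= (1, 24)


if __name__ == "__main__":
    print("chat elements available:", has_chat_elements())
```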

💡Launching the Demo

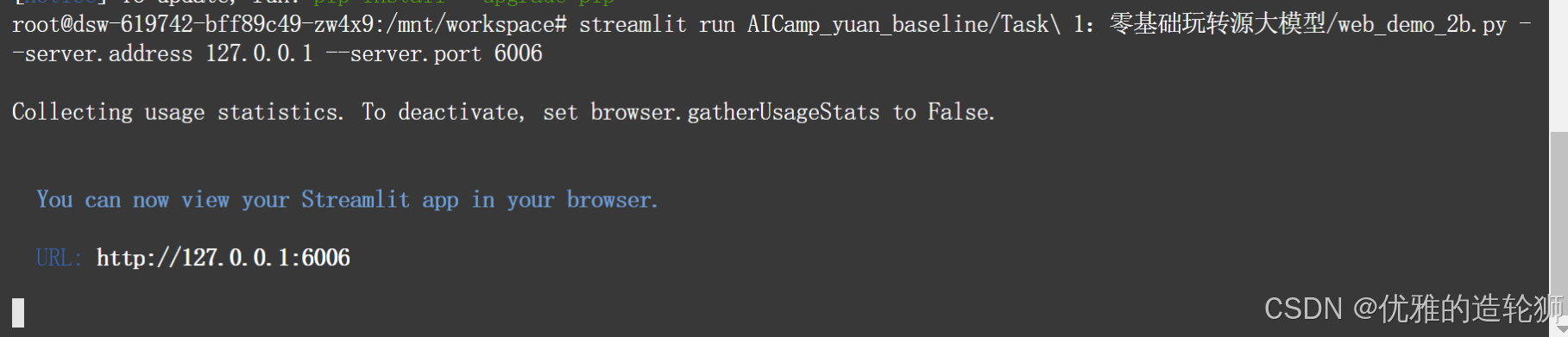

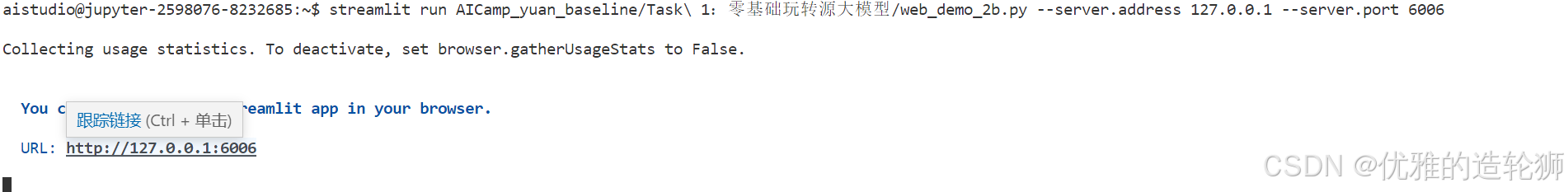

```shell
streamlit run AICamp_yuan_baseline/Task\ 1:零基础玩转源大模型/web_demo_2b.py --server.address 127.0.0.1 --server.port 6006
```

Click 127.0.0.1:6006 to open the page.
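If the page at 127.0.0.1:6006 does not load, one quick check, run on the same machine as Streamlit, is whether anything is actually listening on that port. A stdlib-only sketch; `is_listening` is a hypothetical helper name:

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 6006, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # A successful connect means a server (e.g. Streamlit) is bound to the port
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    print("streamlit reachable:", is_listening())
```

If this prints `True` but the browser still can't reach the page, the problem is more likely the platform's port forwarding than the demo itself.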

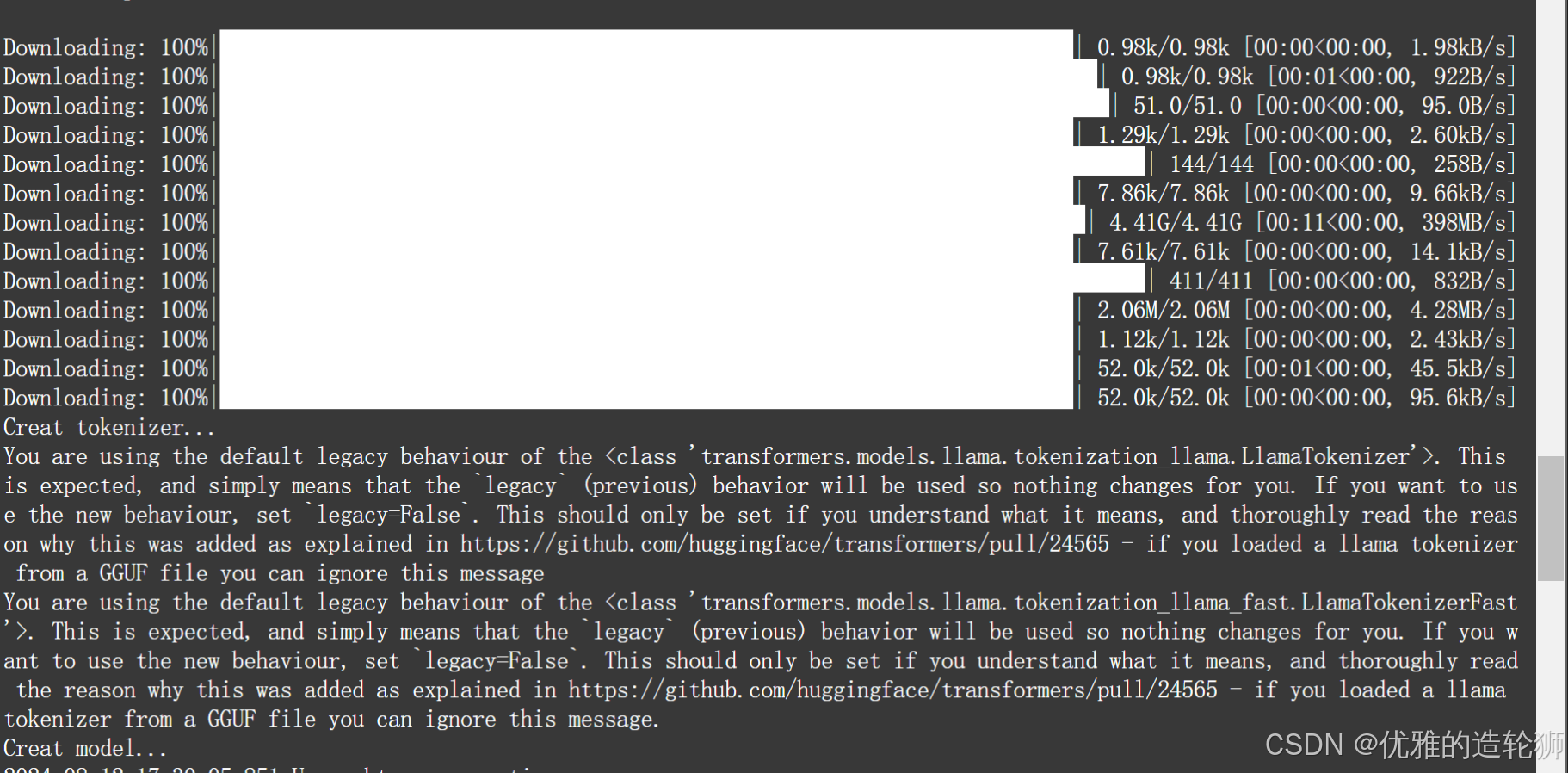

A new page opens and the model data starts downloading, but then this error appears:

```text
RuntimeError: Found no NVIDIA driver on your system. Please check that you have an NVIDIA GPU and installed a driver from http://www.nvidia.com/Download/index.aspx

Traceback:
File "/usr/local/lib/python3.10/site-packages/streamlit/runtime/scriptrunner/script_runner.py", line 552, in _run_script
    exec(code, module.__dict__)
File "/mnt/workspace/AICamp_yuan_baseline/Task 1:零基础玩转源大模型/web_demo_2b.py", line 36, in <module>
    tokenizer, model = get_model()
File "/usr/local/lib/python3.10/site-packages/streamlit/runtime/caching/cache_utils.py", line 211, in wrapper
    return cached_func(*args, **kwargs)
File "/usr/local/lib/python3.10/site-packages/streamlit/runtime/caching/cache_utils.py", line 240, in __call__
    return self._get_or_create_cached_value(args, kwargs)
File "/usr/local/lib/python3.10/site-packages/streamlit/runtime/caching/cache_utils.py", line 266, in _get_or_create_cached_value
    return self._handle_cache_miss(cache, value_key, func_args, func_kwargs)
File "/usr/local/lib/python3.10/site-packages/streamlit/runtime/caching/cache_utils.py", line 320, in _handle_cache_miss
    computed_value = self._info.func(*func_args, **func_kwargs)
File "/mnt/workspace/AICamp_yuan_baseline/Task 1:零基础玩转源大模型/web_demo_2b.py", line 30, in get_model
    model = AutoModelForCausalLM.from_pretrained(path, torch_dtype=torch_dtype, trust_remote_code=True).cuda()
File "/usr/local/lib/python3.10/site-packages/transformers/modeling_utils.py", line 2766, in cuda
    return super().cuda(*args, **kwargs)
File "/usr/local/lib/python3.10/site-packages/torch/nn/modules/module.py", line 915, in cuda
    return self._apply(lambda t: t.cuda(device))
File "/usr/local/lib/python3.10/site-packages/torch/nn/modules/module.py", line 779, in _apply
    module._apply(fn)
File "/usr/local/lib/python3.10/site-packages/torch/nn/modules/module.py", line 779, in _apply
    module._apply(fn)
File "/usr/local/lib/python3.10/site-packages/torch/nn/modules/module.py", line 804, in _apply
    param_applied = fn(param)
File "/usr/local/lib/python3.10/site-packages/torch/nn/modules/module.py", line 915, in <lambda>
    return self._apply(lambda t: t.cuda(device))
File "/usr/local/lib/python3.10/site-packages/torch/cuda/__init__.py", line 293, in _lazy_init
    torch._C._cuda_init()
```

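So the demo needs a GPU environment: `get_model()` calls `.cuda()` on the model, and this instance has no NVIDIA driver.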
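A minimal sketch of a GPU-or-CPU fallback, kept torch-free so the decision logic can be tested on its own. `choose_device` is a hypothetical helper, and bfloat16 on GPU is my assumption (the CPU script later in this post uses `torch.float32`). For Yuan2, switching the device alone is probably not enough: the Windows CPU notes further down also swap in CPU config/model files to turn off flash attention.

```python
from typing import Tuple


def choose_device(cuda_available: bool) -> Tuple[str, str]:
    """Pick (device, dtype name) for loading the model.

    Assumption: bfloat16 on GPU, float32 on CPU.
    """
    if cuda_available:
        return "cuda", "bfloat16"
    return "cpu", "float32"


# In the demo it would be used roughly like this (not executed here):
#   device, dtype_name = choose_device(torch.cuda.is_available())
#   model = AutoModelForCausalLM.from_pretrained(
#       path, torch_dtype=getattr(torch, dtype_name), trust_remote_code=True).to(device)
#   inputs = inputs.to(device)
```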

💡Moving to PaddlePaddle

```shell
git lfs install
git clone https://www.modelscope.cn/datasets/Datawhale/AICamp_yuan_baseline.git
pip install streamlit==1.24.0 --user
```

🔈Launching the Demo

```shell
streamlit run AICamp_yuan_baseline/Task\ 1:零基础玩转源大模型/web_demo_2b.py --server.address 127.0.0.1 --server.port 6006
```

Checking the instance's public IP:

```shell
aistudio@jupyter-2598076-8232685:~$ curl ifconfig.me
```

Still no luck, so I switched back to the other mode, JupyterLab:

```shell
streamlit run AICamp_yuan_baseline/baseline/web_demo_2b.py --server.address 127.0.0.1 --server.port 6006
```

Then I realized I had forgotten to convert the code (it is written for PyTorch).

🔈Converting torch to paddle

Reference: 代码自动转换工具-使用文档-PaddlePaddle深度学习平台 (Code Automatic Conversion Tool - Documentation - PaddlePaddle Deep Learning Platform)

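In the end it turned out that only the snippet below can be used here (pulling the model from GitHub would presumably behave the same):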

```python
from modelscope import snapshot_download
model_dir = snapshot_download('IEITYuan/Yuan2-2B-Mars-hf', cache_dir='./')
```

But the large model we need could not be downloaded.
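When a download fails or stops partway, it helps to check that the model directory actually has files before handing it to `from_pretrained`. A small sketch with hypothetical helper names: the `cache_dir/<owner>/<name>` layout is an assumption inferred from the CPU script below, which pairs `cache_dir='./'` with `path = './IEITYuan/Yuan2-2B-Mars-hf'`, and looking for `config.json` is only a heuristic.

```python
import os


def model_dir_for(cache_dir: str, model_id: str) -> str:
    """Assumed layout: files for 'owner/name' land in cache_dir/owner/name."""
    owner, name = model_id.split("/", 1)
    return os.path.join(cache_dir, owner, name)


def looks_downloaded(model_dir: str) -> bool:
    """Heuristic: a HuggingFace-format model directory should at least contain config.json."""
    return os.path.isfile(os.path.join(model_dir, "config.json"))


if __name__ == "__main__":
    d = model_dir_for("./", "IEITYuan/Yuan2-2B-Mars-hf")
    print(d, "downloaded" if looks_downloaded(d) else "missing or incomplete")
```

In practice `snapshot_download` already returns the local directory, so passing its return value straight to `looks_downloaded` avoids guessing the layout at all.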

So it was back to ModelScope after all.

Besides, Streamlit isn't very pleasant to use on PaddlePaddle.

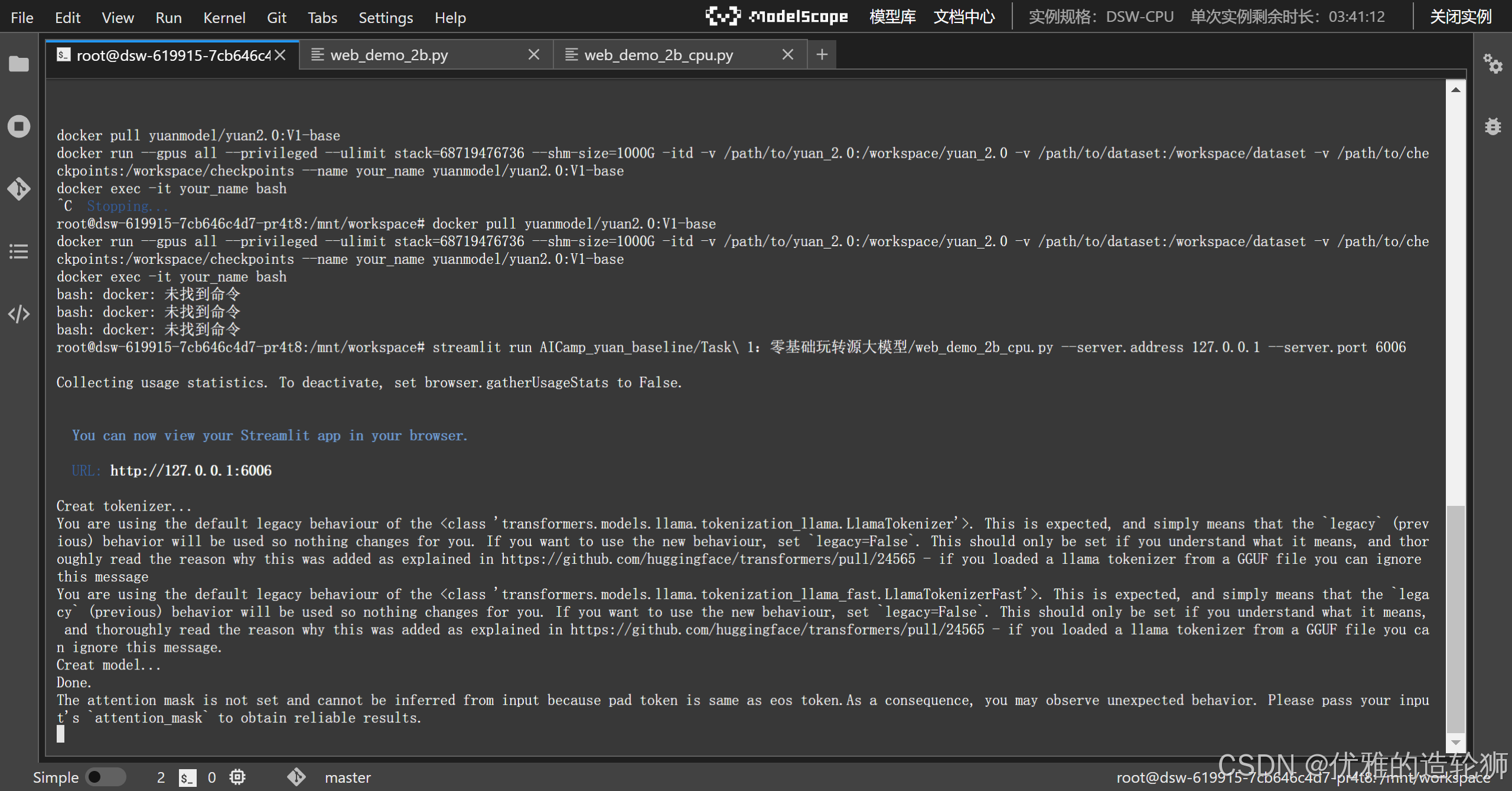

💡Round two on ModelScope

```shell
git lfs install
git clone https://www.modelscope.cn/datasets/Datawhale/AICamp_yuan_baseline.git
pip install streamlit==1.24.0
streamlit run AICamp_yuan_baseline/Task\ 1:零基础玩转源大模型/web_demo_2b.py --server.address 127.0.0.1 --server.port 6006
```
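The `\ ` in `Task\ 1:...` escapes the space in the directory name for the shell. If you launch the demo from Python instead, passing the arguments as a list sidesteps the quoting entirely. A sketch; `streamlit_cmd` is a hypothetical helper, and the flags are the same ones used above:

```python
import shlex
from typing import List


def streamlit_cmd(script: str, address: str = "127.0.0.1", port: int = 6006) -> List[str]:
    """Build the argv for `streamlit run`; as a list, spaces in the path need no escaping."""
    return ["streamlit", "run", script, "--server.address", address, "--server.port", str(port)]


if __name__ == "__main__":
    cmd = streamlit_cmd("AICamp_yuan_baseline/Task 1:零基础玩转源大模型/web_demo_2b.py")
    # Shell-quoted form, equivalent to the backslash-escaped command above
    print(shlex.join(cmd))
    # To actually launch it: import subprocess; subprocess.run(cmd, check=True)
```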

CPU deployment on Windows

Only deployment via the HuggingFace model is supported.

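Step 1: Manually disable flash attention (flash_atten) by editing the HuggingFace model's configuration files: replace the contents of config.json with those of config_cpu.json, and replace the contents of yuan_hf_model.py with those of yuan_hf_model_cpu.py.

Step 2: Deploy following the Hugging Face model inference API guide, and get the request URL of the inference service: http://127.0.0.1:8000

Step 3: Deploy YuanChat (源Chat) following the YuanChat deployment docs.

Step 4: Open http://localhost:5050 in a browser to verify that the deployment works.

For the full deployment procedure, see the Yuan2.0 and YuanChat docs.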
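Step 1 is just two file swaps, so it can be scripted. A sketch with a hypothetical helper name; it assumes `config_cpu.json` and `yuan_hf_model_cpu.py` have already been placed in the model directory next to the files they replace, and it keeps a `.bak` copy of each original so the GPU versions can be restored:

```python
import os
import shutil

# Step 1 pairs: (CPU variant, file it replaces)
CPU_SWAPS = [
    ("config_cpu.json", "config.json"),
    ("yuan_hf_model_cpu.py", "yuan_hf_model.py"),
]


def switch_to_cpu_files(model_dir: str) -> None:
    """Overwrite config.json / yuan_hf_model.py with their *_cpu variants."""
    # Check every source first so we never leave a half-swapped directory
    for src_name, _ in CPU_SWAPS:
        src = os.path.join(model_dir, src_name)
        if not os.path.isfile(src):
            raise FileNotFoundError(src)
    for src_name, dst_name in CPU_SWAPS:
        dst = os.path.join(model_dir, dst_name)
        backup = dst + ".bak"
        # Back up the original only once, so rerunning doesn't clobber it
        if os.path.isfile(dst) and not os.path.exists(backup):
            shutil.copyfile(dst, backup)
        shutil.copyfile(os.path.join(model_dir, src_name), dst)
```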

🔈Here's a CPU version of the code

ModelScope version

```python
# Import the required libraries
from transformers import AutoTokenizer, AutoModelForCausalLM
import torch
import streamlit as st
from modelscope import snapshot_download

# Create a title
st.title("💬 Yuan2.0 AI Coding Assistant")

# Download the Yuan model
model_dir = snapshot_download('IEITYuan/Yuan2-2B-Mars-hf', cache_dir='./')
# model_dir = snapshot_download('IEITYuan/Yuan2-2B-July-hf', cache_dir='./')

# Model path
path = './IEITYuan/Yuan2-2B-Mars-hf'
# path = './IEITYuan/Yuan2-2B-July-hf'

# Model data type: torch.float32, suitable for CPU
torch_dtype = torch.float32


# Load the model and tokenizer (cached so Streamlit reruns don't reload them)
@st.cache_resource
def get_model():
    print("Creating tokenizer...")
    tokenizer = AutoTokenizer.from_pretrained(path, add_eos_token=False, add_bos_token=False, eos_token='<eod>')
    tokenizer.add_tokens(['<sep>', '<pad>', '<mask>', '<predict>', '<FIM_SUFFIX>', '<FIM_PREFIX>', '<FIM_MIDDLE>',
                          '<commit_before>', '<commit_msg>', '<commit_after>', '<jupyter_start>', '<jupyter_text>',
                          '<jupyter_code>', '<jupyter_output>', '<empty_output>'], special_tokens=True)
    print("Creating model...")
    model = AutoModelForCausalLM.from_pretrained(path, torch_dtype=torch_dtype, trust_remote_code=True)
    print("Done.")
    return tokenizer, model


# Load the model and tokenizer
tokenizer, model = get_model()

# On the first run there is no "messages" in session_state, so create an empty list
if "messages" not in st.session_state:
    st.session_state["messages"] = []

# On every rerun, replay all messages in session_state into the chat UI
for msg in st.session_state.messages:
    st.chat_message(msg["role"]).write(msg["content"])

# If the user typed something into the chat input box
if prompt := st.chat_input():
    # Add the user's input to the messages list in session_state
    st.session_state.messages.append({"role": "user", "content": prompt})
    # Show the user's input in the chat UI
    st.chat_message("user").write(prompt)

    # Call the model: join the conversation history into one prompt
    prompt = "<n>".join(msg["content"] for msg in st.session_state.messages) + "<sep>"
    inputs = tokenizer(prompt, return_tensors="pt")["input_ids"]
    # Model and inputs are both on the CPU, so no .cuda() calls are needed
    outputs = model.generate(inputs, do_sample=False, max_length=1024)  # greedy decoding, max total length 1024
    output = tokenizer.decode(outputs[0])
    response = output.split("<sep>")[-1].replace("<eod>", '')

    # Add the model's output to the messages list in session_state
    st.session_state.messages.append({"role": "assistant", "content": response})
    # Show the model's output in the chat UI
    st.chat_message("assistant").write(response)
```
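The prompt format is the part most worth getting right: the whole history is joined with `<n>`, `<sep>` marks where the model should start answering, and the reply is whatever follows the last `<sep>`, minus `<eod>`. Here is the same string handling pulled out into two plain functions (hypothetical names) so it can be checked without loading the model:

```python
from typing import Dict, List


def build_prompt(messages: List[Dict[str, str]]) -> str:
    """Join the whole chat history with <n> and end with <sep>, as the demo does."""
    return "<n>".join(m["content"] for m in messages) + "<sep>"


def extract_response(decoded: str) -> str:
    """Keep the text after the last <sep> and drop the <eod> end marker."""
    return decoded.split("<sep>")[-1].replace("<eod>", "")


if __name__ == "__main__":
    history = [{"role": "user", "content": "Write a quicksort in Python"}]
    print(build_prompt(history))
    print(extract_response("Write a quicksort in Python<sep>def quicksort(xs): ...<eod>"))
```

Because the full history, including the assistant's own replies, is re-sent every turn, and `max_length=1024` in `generate` counts the prompt tokens too, long chats leave less and less room for the answer. `max_new_tokens` is the `generate` argument that caps only the newly generated part.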

Aha, at least it runs now.

On CPU it's very, very, very slow, but you can still see it working (the helplessness of having no compute ╮(╯▽╰)╭)
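A rough back-of-the-envelope for why the CPU run is heavy: the script loads the weights in `torch.float32`, which takes 4 bytes per parameter. Taking the "2B" in the model name as roughly 2 billion parameters (an approximation, not the exact count), the weights alone come to about 8 GB, versus about 4 GB at 2 bytes per parameter. More bytes per parameter also means more memory traffic per generated token. The helper below is a hypothetical sketch and ignores activations, the KV cache, and framework overhead:

```python
# Bytes per parameter for common dtypes
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the weights alone in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in ("float32", "bfloat16"):
        # 2e9 is an approximation taken from the "2B" in the model name
        print(f"{dtype}: ~{weight_memory_gb(2e9, dtype):.0f} GB")
```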